February 1, 2023 | Cameron Brown

ChatGPT as a Tool

As a penetration tester, I’ve used ChatGPT in my workflows since its release to identify its strengths and weaknesses. In doing so, I’ve found that ChatGPT’s key role in penetration testing is that of an assistant. During my testing, I’ve used ChatGPT to write scripts, answer questions, and even review source code. The results made it apparent that, when used as a tool, ChatGPT can greatly increase the efficiency of penetration testing.

For instance, when testing for DNS zone transfers, I was able to get ChatGPT to write a bash script that automated the process entirely (Figure 2). ChatGPT can automate almost any process used daily in penetration testing by writing scripts in whatever language is needed. In fact, the limit on the code and scripts ChatGPT can produce seems to be set entirely by the user making the request. Although ChatGPT is not perfect, a user who gives a clear prompt with enough detail almost always receives a useful response. However, if a user expects specific behavior from their script or code, they should say so; otherwise, ChatGPT’s output sticks as closely as possible to what was literally requested. ChatGPT will not optimize code or ensure its security unless the user directly asks it to.

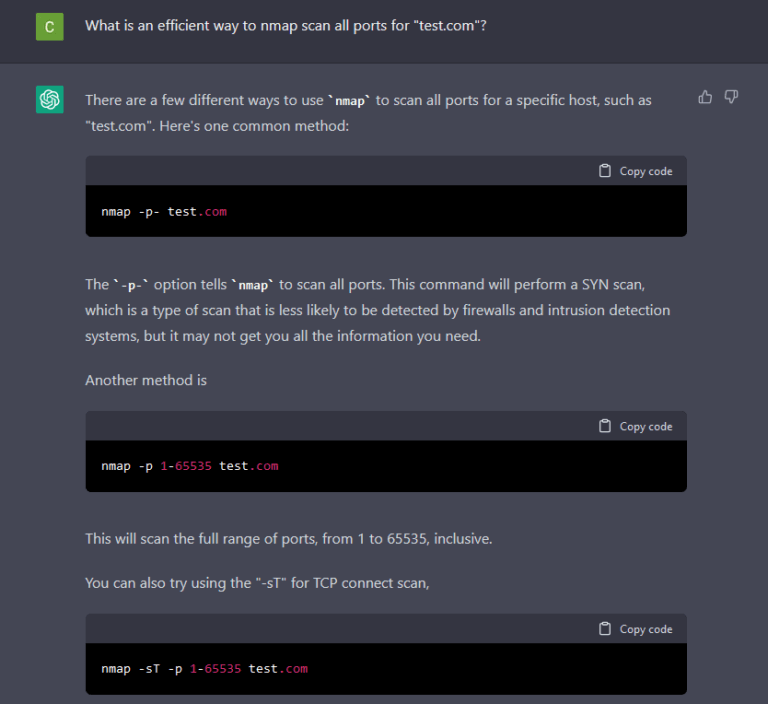

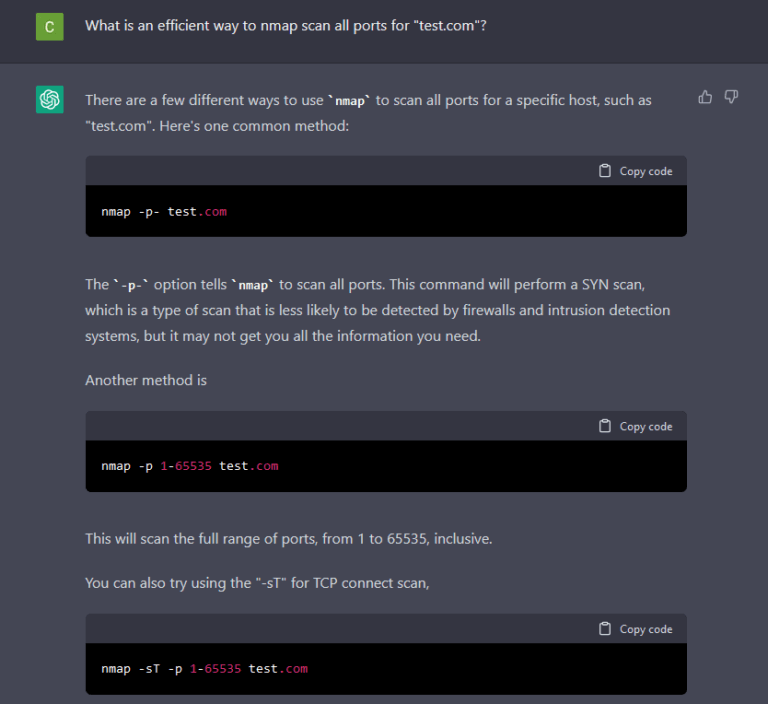
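The script from Figure 2 appears only as a screenshot. As a rough illustration of what that kind of automation involves (not ChatGPT’s actual output), here is a minimal Python sketch that shells out to `dig`. It assumes `dig` is installed and on the PATH, and the function names are hypothetical. It looks up a domain’s name servers, requests a full zone transfer (AXFR) from each one, and uses a simple heuristic to decide whether the transfer worked: a complete AXFR response begins and ends with the zone’s SOA record, so the sketch looks for at least two SOA lines.

```python
import subprocess


def get_nameservers(domain: str) -> list[str]:
    """Return the NS records for `domain` using `dig +short NS`."""
    result = subprocess.run(
        ["dig", "+short", "NS", domain],
        capture_output=True, text=True, check=False,
    )
    # Each non-empty, non-comment line of +short output is one name server.
    return [
        line.strip()
        for line in result.stdout.splitlines()
        if line.strip() and not line.startswith(";")
    ]


def axfr_command(domain: str, nameserver: str) -> list[str]:
    """Build a dig command that asks `nameserver` for a full zone transfer."""
    return ["dig", f"@{nameserver}", domain, "AXFR"]


def transfer_succeeded(dig_output: str) -> bool:
    """Heuristic: a complete AXFR starts and ends with the SOA record,
    so a successful transfer should contain at least two SOA lines."""
    soa_count = 0
    for line in dig_output.splitlines():
        if not line.strip() or line.startswith(";"):
            continue  # skip blank lines and dig's comment lines
        fields = line.split()
        # Record lines look like: <name> <ttl> <class> <type> <rdata...>
        if len(fields) >= 4 and fields[3].upper() == "SOA":
            soa_count += 1
    return soa_count >= 2


def check_zone_transfers(domain: str) -> dict[str, bool]:
    """Attempt an AXFR against every name server listed for `domain`."""
    results = {}
    for ns in get_nameservers(domain):
        proc = subprocess.run(
            axfr_command(domain, ns), capture_output=True, text=True, check=False
        )
        results[ns] = transfer_succeeded(proc.stdout)
    return results
```

Calling `check_zone_transfers("example.com")` returns a dictionary mapping each name server to `True` if it appears to allow the transfer. Shelling out to `dig` keeps the sketch dependency-free, much like a bash script would; a dedicated DNS library would make the success check more robust than counting SOA lines.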

Script and code writing is not the only ChatGPT feature we can use in penetration testing. ChatGPT is an amazing alternative to Google and can be a convenient, quick source of information. I decided to emulate a student learning Nmap and ask ChatGPT some simple questions about scanning, which it handled perfectly (Figures 3 and 4). My only qualm with some of ChatGPT’s responses lies in its need for exact detail: ChatGPT does not take hints and instead requires specific guidance. When I asked ChatGPT how to efficiently scan all ports (Figure 3), I hoped it would mention, unprompted, the timing modifications a user could make to decrease the intensity of the scan.

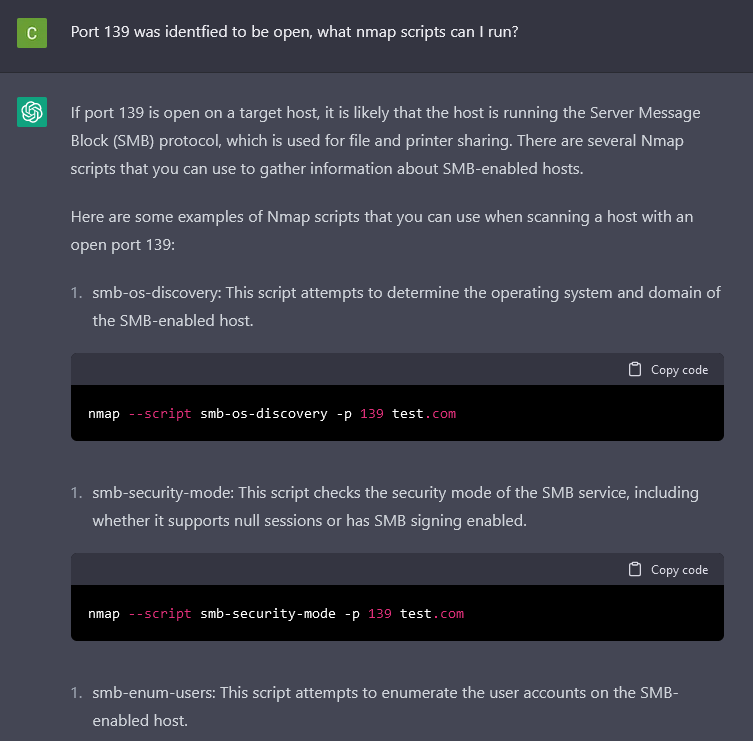
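Nmap exposes timing modifications like these through its timing templates, `-T0` (paranoid) through `-T5` (insane), where `-T3` is the default and lower values slow the scan and reduce its intensity, and through rate caps such as `--max-rate`. The hypothetical helper below is a minimal sketch, assuming Nmap is installed, that builds a full-port scan command (`-p-` covers ports 1 through 65535) with a chosen timing template and an optional packets-per-second limit.

```python
import shlex
from typing import List, Optional


def build_full_port_scan(target: str, timing: int = 3, max_rate: Optional[int] = None) -> List[str]:
    """Build an Nmap command that scans every port (1-65535) on `target`.

    timing:   Nmap timing template, 0 (paranoid) to 5 (insane); 3 is the default.
              Lower values slow the scan down and make it less intensive.
    max_rate: optional cap on packets sent per second (Nmap's --max-rate).
    """
    if not 0 <= timing <= 5:
        raise ValueError("Nmap timing templates range from -T0 to -T5")
    cmd = ["nmap", "-p-", f"-T{timing}"]
    if max_rate is not None:
        cmd += ["--max-rate", str(max_rate)]
    cmd.append(target)
    return cmd


# A gentler full-port scan: "polite" timing and at most 100 packets per second.
print(shlex.join(build_full_port_scan("192.0.2.10", timing=2, max_rate=100)))
# prints: nmap -p- -T2 --max-rate 100 192.0.2.10
```

The helper only builds the argument list; passing it to `subprocess.run` would launch the actual scan.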

The last test I gave ChatGPT was to review source code for vulnerabilities (Figures 5 and 6). Unsurprisingly, ChatGPT handled this perfectly. Given two pieces of source code, it successfully identified a SQL injection (SQLi) vulnerability in the vulnerable piece and correctly judged the other piece to be safe from SQL injection. This makes ChatGPT a great starting point for source code review, as it will hopefully flag glaring weaknesses in the code. However, ChatGPT’s accuracy cannot be relied upon, and it should never be used to replace manual source code review.
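The code ChatGPT reviewed appears only in Figures 5 and 6. To illustrate the kind of difference it was asked to spot, here is a minimal, hypothetical example using Python’s standard-library `sqlite3` module: one lookup builds its query by string formatting and is injectable, while the other passes user input as a bound parameter and is not. The table, data, and function names are invented for illustration.

```python
import sqlite3


def setup_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, password TEXT)")
    conn.executemany(
        "INSERT INTO users (name, password) VALUES (?, ?)",
        [("alice", "s3cret"), ("bob", "hunter2")],
    )
    return conn


def find_user_vulnerable(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: user input is pasted directly into the SQL text,
    # so input containing quotes can change the structure of the query.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # SAFE: the ? placeholder sends `name` as a bound parameter,
    # so it is always treated as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


conn = setup_db()
payload = "' OR '1'='1"
print(find_user_vulnerable(conn, payload))  # every row in the table comes back
print(find_user_safe(conn, payload))        # no rows: no user has that literal name
```

The safe version works through parameterization rather than escaping: the driver keeps the query text and the user’s value separate, which is exactly the pattern a reviewer, human or ChatGPT, should check for.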

Despite its minor shortcomings, ChatGPT thoroughly surprised me with how much it could contribute to the penetration testing process. Going into my experiments with ChatGPT, I expected that it would not be able to adequately assist me in my work. After spending more than a month with it, I now realize that it is indispensable. ChatGPT cannot replace the work I do, but it can entirely remove the need to complete some of the tedious, menial tasks involved in penetration testing. Its ability to write scripts, evaluate code, and intelligently offer guidance makes it the ultimate assistant for a penetration tester.

ChatGPT for Evil

Despite the restrictions OpenAI places on ChatGPT, it is still possible to use ChatGPT for malicious purposes. Whether the goal is writing malicious code or phishing emails, many users have quickly found ways to bypass those restrictions. A user who wishes to use ChatGPT for malicious purposes needs only to know exactly what they want (Figures 7 and 8).

The focus of these blogs, of course, is ethical hacking, so we won’t go into depth on how to use ChatGPT for evil. That does not mean ChatGPT cannot be used to do these things. With ChatGPT, it is possible to bypass its filters and create a phishing email to send to customers (Figure 8). ChatGPT also makes it much easier to create working proofs of concept (PoCs) for DLL hijacking (Figure 7). Ultimately, what’s true for ethical hacking with ChatGPT seems to be true for all hacking with it: as long as the user is extremely specific with their input, ChatGPT may be able to do it.

The Future of ChatGPT

Conclusion