By David Grisales Posada

Machine learning has revolutionized threat detection in cybersecurity. These intelligent systems can identify and flag malicious attacks with impressive accuracy, significantly reducing false alarms and strengthening our digital defenses. However, researchers have discovered a critical vulnerability: these systems can be fooled by carefully crafted inputs called adversarial examples.

What Are Adversarial Examples?

Adversarial examples are strategically modified inputs designed to trick machine learning models into making wrong predictions. While this concept was first demonstrated with image classifiers, it has serious implications for security systems that detect malware and cyber threats.

A Simple Example: The Pig That Becomes a Panda

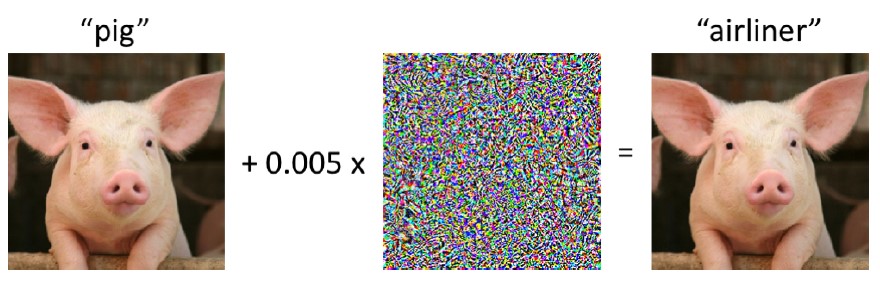

Imagine a neural network that correctly identifies a picture of a pig. This network analyzes the image pixel by pixel through multiple layers of artificial “neurons”, learning to recognize patterns that distinguish pigs from other animals. Through continuous training, it becomes highly accurate at this task.

Here’s where it gets interesting: by making tiny, almost invisible changes to certain pixels—adding what researchers call “noise”—we can trick the network into misidentifying the pig as something completely different, like a panda. To human eyes, the image looks identical, but the neural network is completely fooled.

Taken from: https://wandb.ai/authors/adv-dl/reports/An-Introduction-to-Adversarial-Examples-

in-Deep-Learning–VmlldzoyMTQwODM

The key is precision. The modifications must be carefully calculated to mislead the model while remaining imperceptible to humans.

The Technical Foundation

To achieve this, the noise isn’t random but calculated. Attackers or testers often need to access the model’s parameters and weights to find the most sensitive parts of the input vector. This process frequently leverages gradients (mathematical measures of change) calculated using techniques like backpropagation and the Jacobian matrix. These methods pinpoint exactly which small change will cause the largest misclassification.

Attacking Malware Detection Systems

Multiple research studies have explored how to create adversarial examples specifically for machine learning-based malware detectors. Which is valuable because it helps improve these systems by revealing their weaknesses.

How the Research Works

In these studies, programs and malicious code are represented as binary vectors. Each position in the vector represents a feature—like whether the program uses a specific API call such as “WriteFile”. For example, a vector might look like: [1 0 1 0 0 1], where each 1 or 0 indicates the presence or absence of that feature.

A Critical Constraint: Unlike image pixels that can be altered freely, you can’t just remove features from malware. Removing functionality would break the program entirely, imagine deleting the “WriteFile” capability from malware that needs to write files. Instead, attackers can only add new features by inserting dummy code that triggers these features without affecting the malware’s core functionality.

Two Types of Attacks

Security researchers test these systems using two approaches:

White-Box Attacks: When You Have Full Access

In white-box testing, attackers have complete access to the detection model’s internal structure. This allows them to use powerful techniques to identify the most vulnerable features.

The Attack Process

The attack follows an iterative loop:

- Compute the forward derivative (gradient).

- Identify the most sensitive feature for the current input vector.

- Change that feature from zero to one (i.e., add a new API call).

- Repeat the process a set number of times (e.g., 20 iterations) to minimize the changes.

Researchers may limit the iterations to keep the modifications minimal and realistic.

Real-World Results

Tests against various neural networks using the DREBIN dataset (which contains 123,453 benign Android apps and 5,560 malicious ones) with a 20-iteration limit produced striking results. The misclassification rate jumped from 40% to 84%—meaning a vast number of malicious apps were incorrectly labeled as safe.

Black-Box Attacks: The Realistic Scenario

In reality, attackers rarely have access to a detection system’s internal workings. This is called a black-box scenario, and it’s much more challenging. Traditional gradient-based methods won’t work here, but researchers have found a solution using cutting-edge AI technology: Generative Adversarial Networks (GANs).

Understanding GANs

GANs consist of two neural networks engaged in a continuous competition:

- The Generator: Creates fake examples trying to look authentic

- The Discriminator: Attempts to distinguish between real and fake examples

The process works like this:

- The generator creates synthetic examples and sends them to the discriminator

- The discriminator examines both these synthetic examples and real ones, learning to spot the differences

- The generator studies the discriminator’s parameters and adjusts its strategy to become more convincing

- This cycle repeats until the generator becomes highly effective at creating realistic fakes

Enter MalGAN: Weaponizing GANs for Black-Box Attacks

MalGAN adapts the GAN framework specifically for attacking malware detection systems. Here’s how it works:

The Generator’s Role: Creates adversarial malware examples by adding features to the original malicious code. It uses an “OR” operation, which means it only adds new features—never removes existing ones, preserving the malware’s functionality.

The Discriminator’s Role: Acts as a substitute for the real black-box detector. The discriminator receives feedback from the actual black-box system (which labels programs as benign or malicious) and learns to mimic its behavior. Since we have access to the discriminator’s parameters, we can extract gradient information similar to what the black-box detector would use.

The Clever Part: The generator automatically adjusts its adversarial examples based on the discriminator’s parameters. Even though we can’t see inside the black-box detector, we can reverse-engineer its behavior through this proxy.

Feature Extraction Strategy

To make this work, researchers need to identify which features the black-box detector is sensitive to. A simple technique: take a benign program and systematically change individual features. When it suddenly gets flagged as malware, you’ve found a feature the detector cares about.

Training MalGAN

The training process requires two datasets: benign programs and known malware samples. Here’s the training cycle:

- Take a batch of malware samples

- Generate adversarial versions using the generator

- Take a batch of benign programs

- Feed both sets to the black-box detector for labeling

- Update the discriminator based on the black-box detector’s feedback

- Update the generator to better fool the discriminator

- Repeat until achieving satisfactory results

The Results Are Alarming

Researchers tested MalGAN against multiple detection algorithms including Random Forest, Logistic Regression, Decision Trees, and others. Using a dataset of 180,000 programs (30% malicious) with 160-dimensional feature vectors, the results were striking:

MalGAN reduced detection rates from normal levels to nearly 0% across multiple machine learning models. In some cases, 100% of adversarial malware examples successfully bypassed detection.

The Retraining Problem

Defenders can retrain their models using adversarial examples to improve detection. However, the research revealed a troubling pattern:

Even after retraining the detector on adversarial examples, attackers could generate new adversarial examples that achieved similar results. This creates a never-ending cat-and-mouse game where defenders are always one step behind.

The Bottom Line

These adversarial techniques are powerful tools. They show how ML-based detection systems can be bypassed using strategically crafted inputs, whether through precise gradient calculations or intelligent AI-based attacks like MalGANs.

These methods—White Box, MalGANs, and their variations (such as the resource-saving n-gram MalGAN)—form the foundation of offensive and defensive research, pushing the boundaries of what machine learning can achieve in the security space.

Main Sources:

- Grosse, K., Papernot, N., Manoharan, P., Backes, M., & McDaniel, P. (2016). Adversarial Perturbations Against Deep Neural Networks for Malware Classification. arXiv preprint arXiv:1606.04435.

- Hu, W., & Tan, Y. (2017). Generating Adversarial Malware Examples for Black-Box Attacks Based on GAN. arXiv preprint arXiv:1702.05983.

- Payong, A. (2024, December 31). Understanding adversarial attacks using fast gradient sign method. DigitalOcean. https://www.digitalocean.com/community/tutorials/fast-gradient-sign-method